Nov 09, 2023

In the burgeoning field of AI, large language models (LLMs) currently dominate the headlines, producing applications that span from writing assistance to conversational AI. The popularity of these models is driven by their ability to generate text that is not only coherent but also contextually relevant. Default LLM inference pipelines operate by choosing the next word with the highest probability. However, these greedy results often yield predictable outputs and lack variability, which might not be acceptable for all applications.

Understanding and manipulating the text generation strategies of language models can significantly increase the quality of the generated text. This blog covers how to tune the output of LLMs using DeepSparse, Neural Magic's CPU inference runtime that takes advantage of sparsity to accelerate neural network inference. We will explore pivotal parameters such as temperature, top_k, top_p, and repetition_penalty, which influence the text generation process. By adjusting these parameters, you can modify the output for a variety of use cases, ranging from creative writing to technical documentation.

By the end of this blog you will understand:

- How the temperature parameter controls the creativity of the model.
- How top_k dictates the number of words that the model will sample the next word from.
- How the top_p parameter allows the number of candidate words to be chosen dynamically.
- How the repetition_penalty parameter is used to ensure that the model doesn’t output duplicate text.

Continue reading to learn how to tweak these parameters for different use cases. You can also follow along using this notebook.

Temperature: Balancing Creativity and Coherence

When an LLM predicts the next word in a sequence, it generates logits, one score for each potential next word. These logits are then passed through a softmax function to create a probability distribution that sums to one, from which the next word is selected. This is where the temperature parameter comes into play.

The temperature divides the logits before the softmax is applied, which can significantly alter the selection process. With a temperature of 1, the model uses the standard softmax output, striking a balance between randomness and predictability. Adjusting the temperature recalibrates the model's word selection: raising it flattens the distribution and brings more varied and creative outputs, while lowering it sharpens the distribution and yields more predictable and conservative text.

To get more diverse responses from the LLM, increase the temperature, which suits use cases like creative writing. However, as the temperature rises, the responses become more random and the model may start making mistakes such as typos. Conversely, lowering the temperature concentrates probability on the most likely words and makes responses more deterministic, which is desirable for applications such as translation, summarization, and retrieval-augmented generation (RAG), where consistency and clarity matter.
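
The effect of temperature on the softmax can be seen in a few lines of plain Python. This is a minimal sketch for illustration only, not DeepSparse's internal implementation; the function name `softmax_with_temperature` and the example logits are assumptions made up for this example.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum for numerical stability; the result is unchanged.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate words.
logits = [2.0, 1.0, 0.1]
for t in (0.1, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a temperature of 0.1 almost all of the probability mass lands on the highest-scoring word, while at 2.0 the three probabilities move closer together, which is why higher temperatures produce more varied text.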

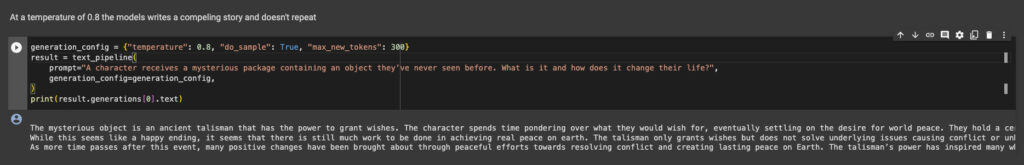

Here is an example showing how to apply the temperature parameter in creative writing using the DeepSparse text generation pipeline:

from deepsparse import TextGeneration

MODEL_PATH = "hf:neuralmagic/mpt-7b-chat-pruned50-quant"

text_pipeline = TextGeneration(model_path=MODEL_PATH, sequence_length=2048)

generation_config = {"temperature": 0.8, "do_sample": True, "max_new_tokens": 300}

result = text_pipeline(
    prompt="A character receives a mysterious package containing an object they’ve never seen before. What is it and how does it change their life?",
    generation_config=generation_config,
)

print(result.generations[0].text)

As seen in other examples from this notebook, using a temperature of 0.8 leads to a more coherent story that has no repetitions compared to a lower temperature of 0.1. It can be argued that the story is better because the model has a wider pool of words to choose from compared to when the temperature is lower.

Top K: Honing in on the Best Candidates

In top_k sampling, only the K words with the highest probabilities are kept as candidates. For instance, imagine setting K to 50. In this scenario, the model zeroes in on the 50 most probable words it believes could follow the current text. Within this subset, the softmax function is applied afresh to renormalize the probabilities of the selected words. The final selection is then sampled from this renormalized distribution, adding an element of unpredictability within the confines of this top-tier group.
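
The filter, renormalize, and sample steps can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions rather than the code DeepSparse runs; the helper names `top_k_filter` and `sample_top_k` and the five-word vocabulary are hypothetical.

```python
import math
import random


def top_k_filter(logits, k):
    """Keep the k highest logits and return renormalized probabilities.

    Returns a dict mapping token index -> probability for the kept tokens.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    # Indices of the k largest logits.
    kept = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the kept logits only.
    peak = max(logits[i] for i in kept)
    exps = {i: math.exp(logits[i] - peak) for i in kept}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def sample_top_k(logits, k, rng=random):
    """Draw one token index from the renormalized top-k distribution."""
    probs = top_k_filter(logits, k)
    indices = list(probs)
    weights = [probs[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]


# Hypothetical logits for a five-word vocabulary.
logits = [1.2, 3.4, 0.3, 2.8, -1.0]
print(top_k_filter(logits, k=2))
print(sample_top_k(logits, k=2))
```

With `k=2`, only the words at indices 1 and 3 can ever be sampled, no matter how the remaining logits are distributed.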

However, the choice of K is not one-size-fits-all. A smaller K tightens the model's focus, which leads to a higher likelihood that common words are chosen, potentially at the cost of variety and nuance. This can be especially useful in settings where precision and adherence to a specific fact are needed—think of tasks like question answering or data extraction, where the range of acceptable responses is typically narrow.

Selecting a high value for K is undesirable for factual applications because the answer may be sampled from lower-probability words. For creative tasks, however, a higher value for K makes the word selection more diverse. This can be particularly beneficial in fields such as storytelling or marketing copy generation, where the unexpected can engage the reader.

Here is an example to show you how to perform a translation task with the DeepSparse text generation pipeline and a top_k value of 50:

from deepsparse import TextGeneration

MODEL_PATH = "hf:neuralmagic/mpt-7b-chat-pruned50-quant"

text_pipeline = TextGeneration(model_path=MODEL_PATH, sequence_length=2048)

generation_config = {"top_k": 50, "max_new_tokens": 300}

result = text_pipeline(
    prompt="Translate the following sentence to French `Today is a good day to go out and play football because it is sunny. After that, you can consider visiting the national park for a nature walk while seeing some wild animals.`",
    generation_config=generation_config,
)

print(result.generations[0].text)

"""Il est bon de sortir et jouer au football parce qu’il est jour de soleil. Après cela, il est possible de visiter le parc national pour une balade dans la nature où il est possible de rencontrer certes animaux sauvés"""

The problem with top_k sampling is that the number of words has to be chosen manually. A better strategy is to let the number of candidate words adapt to the model's probability distribution at each step.

Top P: Choosing the Best Candidates Dynamically

In top_p sampling, the number of candidate words is chosen dynamically: the model keeps the highest-probability words until their cumulative probability reaches or exceeds p. For example, if you set p to 0.8, the pool could be two words with probabilities 0.5 + 0.3 or three words with probabilities 0.4 + 0.3 + 0.2.

Sampling is then done from this smallest set of words whose cumulative probability is at least p. Because the size of the pool depends on the shape of the distribution, the number of words grows or shrinks at each step without you having to set K manually.
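
The selection rule can be sketched in plain Python using the two distributions from the example above. This is an illustration under stated assumptions, not DeepSparse's implementation; the function name `top_p_filter` and the word probabilities are hypothetical.

```python
def top_p_filter(probs, p):
    """Return the smallest set of tokens whose cumulative probability reaches p.

    `probs` maps each token to its probability; the result is renormalized.
    """
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        # A tiny tolerance guards against floating-point rounding.
        if cumulative >= p - 1e-12:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


# Hypothetical next-word distributions: one peaked, one flatter.
peaked = {"the": 0.5, "a": 0.3, "this": 0.1, "that": 0.1}
flat = {"the": 0.4, "a": 0.3, "this": 0.2, "that": 0.1}
print(len(top_p_filter(peaked, 0.8)))  # 2 words: 0.5 + 0.3
print(len(top_p_filter(flat, 0.8)))    # 3 words: 0.4 + 0.3 + 0.2
```

The same value of p yields a pool of two words for the peaked distribution and three for the flatter one, which is the dynamic behavior that a fixed K cannot provide.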

Here is an example showing how to use DeepSparse and LangChain to build RAG applications with a top_p value of 0.92:

from langchain.llms import DeepSparse
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import Chroma
from langchain.chains import RetrievalQA
from langchain.document_loaders import TextLoader, DirectoryLoader

# Same model as in the earlier examples
MODEL_PATH = "hf:neuralmagic/mpt-7b-chat-pruned50-quant"
DATA_PATH = "docs"

# Load the text files and split them into overlapping chunks
loader = DirectoryLoader(DATA_PATH, glob="*.txt", loader_cls=TextLoader)
documents = loader.load()
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
texts = text_splitter.split_documents(documents)

# Embed the chunks and index them in a vector store
embeddings = HuggingFaceEmbeddings(
    model_name="sentence-transformers/all-MiniLM-L6-v2", model_kwargs={"device": "cpu"}
)
docsearch = Chroma.from_documents(texts, embeddings)

generation_config = {
    "top_p": 0.92,
    "max_new_tokens": 300,
    "do_sample": True,
}
model_config = {"sequence_length": 2048}

llm = DeepSparse(
    model=MODEL_PATH, model_config=model_config, generation_config=generation_config
)

# Answer questions using the retrieved chunks as context
chain = RetrievalQA.from_chain_type(
    llm,
    chain_type="stuff",
    return_source_documents=True,
    retriever=docsearch.as_retriever(),
)

res = chain({"query": "What are some Dutch breakfast options?"})
answer = res["result"]
print(answer)

# Beschuit (crispbakes) can be topped with various fruits or whipped cream for dessert

When you set the desired probability for the RAG application, you don’t have to worry about the number of words because the size of the candidate pool is determined dynamically at each step.

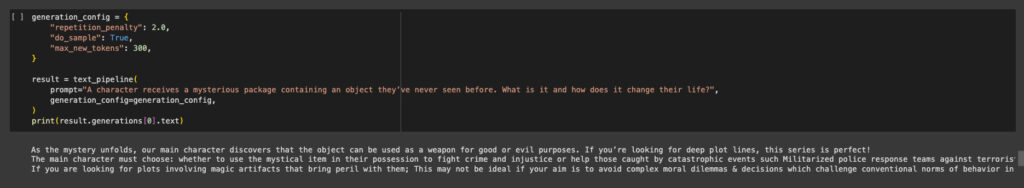

Repetition Penalty: Ensuring No Duplicate Text

Repetition in LLMs is mostly caused by the greedy approach of choosing the next token, where the highest probability token is always selected. These repetitions can be prevented by introducing a penalty that discounts the scores of tokens that have been generated before.

A repetition penalty helps the model generate more diverse content instead of repeating previous phrases. Repetition is prevented by applying a high penalty to phrases or words that tend to be repeated.
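
One common way to discount previously generated tokens is to divide their positive logits by the penalty and multiply their negative logits by it, which is the formulation used by the repetition penalty logits processor in Hugging Face Transformers. The sketch below illustrates that rule in plain Python; whether DeepSparse applies exactly the same formula is an assumption here, and the function name `apply_repetition_penalty` and the example logits are hypothetical.

```python
def apply_repetition_penalty(logits, generated_ids, penalty):
    """Discount the logits of tokens that were already generated.

    Positive logits are divided by the penalty and negative logits are
    multiplied by it, so a penalty above 1.0 always lowers the score.
    """
    if penalty <= 0:
        raise ValueError("penalty must be positive")
    adjusted = list(logits)
    # Each previously generated token is penalized once, however often it occurred.
    for token_id in set(generated_ids):
        score = adjusted[token_id]
        adjusted[token_id] = score * penalty if score < 0 else score / penalty
    return adjusted


# Hypothetical logits for a four-token vocabulary; tokens 0 and 3 were
# already generated earlier in the sequence.
logits = [4.0, 3.0, 1.0, -2.0]
print(apply_repetition_penalty(logits, generated_ids=[0, 3, 0], penalty=2.0))
# [2.0, 3.0, 1.0, -4.0]
```

Before the penalty, token 0 has the highest score; afterwards token 1 does, so even greedy selection would move on to a new token instead of repeating itself.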

With no repetition penalty, the model repeats the phrase “As the character excitement and wonder” for the creative writing task in the example notebook. However, by setting the penalty to 2, the repetition stops:

from deepsparse import TextGeneration

MODEL_PATH = "hf:neuralmagic/mpt-7b-chat-pruned50-quant"

text_pipeline = TextGeneration(model_path=MODEL_PATH, sequence_length=2048)

generation_config = {
    "repetition_penalty": 2.0,
    "do_sample": True,
    "max_new_tokens": 300,
}

result = text_pipeline(
    prompt="A character receives a mysterious package containing an object they’ve never seen before. What is it and how does it change their life?",
    generation_config=generation_config,
)

print(result.generations[0].text)

Conclusion

The DeepSparse text generation pipeline allows you to configure text generation parameters for various use cases. Whether you are building a custom application using the pipeline or building a CPU-powered chat application with LangChain, the DeepSparse interface has you covered.

Neural Magic would love to see the LLM applications you build with DeepSparse on CPU. Join us on Slack to share what you’re working on. You can also ask us any questions on Slack or submit an issue on GitHub. Check out the notebook with all the examples shown in this blog.