Aug 19, 2021

Neural Magic has been busy this summer on the Community Edition (CE) of our DeepSparse tools, and we’re excited to share highlights of releases 0.5 and 0.6. Full technical release notes are always available in our GitHub release indexes, linked from our Docs website or from the specific Neural Magic repository.

For help or questions about any of these highlights, sign up or log in to the Deep Sparse Community Discourse Forum and/or Slack. We are growing the community member by member and would be happy to see you there.

Sparse Model Tutorials, Recipes, Examples, and Downloads, Oh My!

To help users try out and succeed with Neural Magic’s sparsified models, we have created a growing number of “model overview pages,” starting with ResNet-50, MobileNetV1, YOLOv5, YOLOv3, and BERT.

Model pages will point you to tutorials, examples, recipes, and downloads that allow you to:

- Compare the differences between the models for both accuracy and inference performance

- Run the models for inference in deployment or applications

- Train the models on new datasets

Announcing sparsezoo.neuralmagic.com

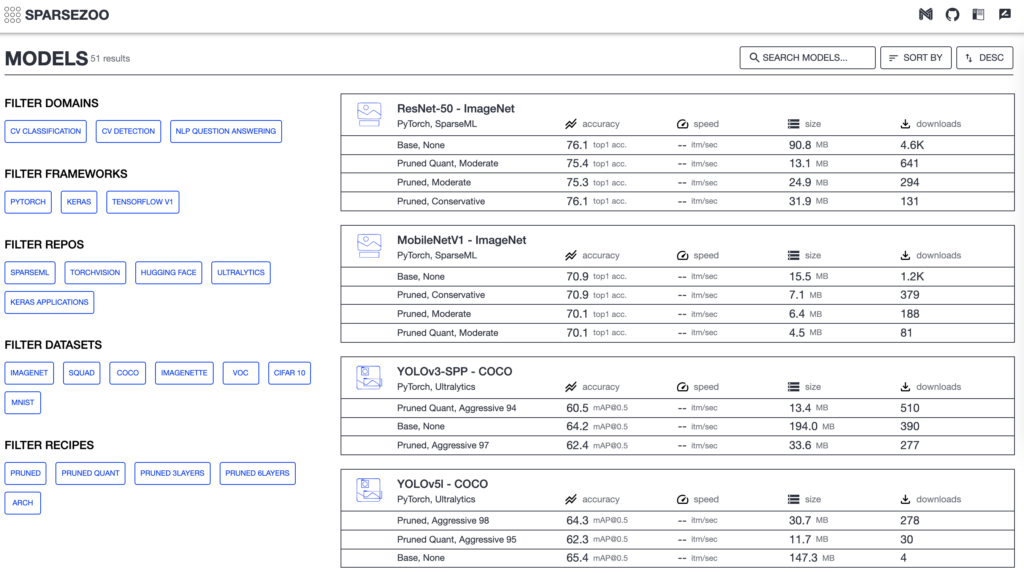

The SparseZoo, our neural network model repository for highly sparse and sparse-quantized models with matching sparsification “recipes,” now has an alpha website. Visitors can visually explore available models by filtering on domain, frameworks, repositories, datasets, or recipes (a recipe encodes the directions for sparsifying a model in a simple, easily editable format). The site displays attributes of each available model, along with model actions that let users train and deploy a given model on their own data.

YOLOv5 Webinar: August 31

Learn about the techniques we used to sparsify YOLOv5 for a 10x increase in performance and 12x smaller model files. Discover how to reproduce our benchmarks and how to train YOLOv5 on new, private datasets. View the webinar recording here.