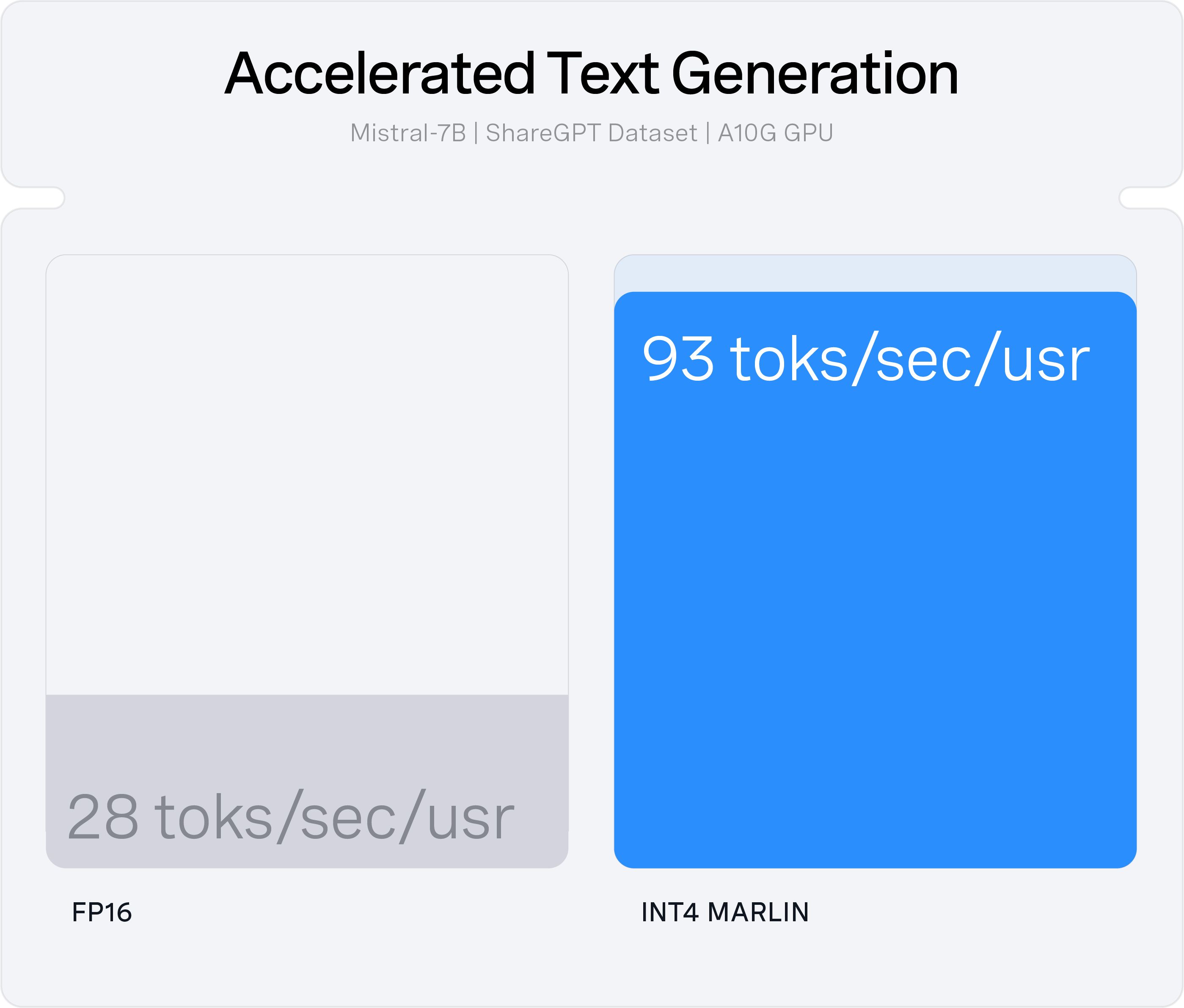

Why Neural Magic

Streamline your AI model deployment and maximize computational efficiency with Neural Magic as your inference serving solution.

Significantly reduce infrastructure costs and complexity: our solutions integrate easily with existing hardware, such as CPUs and GPUs. Our optimization techniques deliver fast inference, enabling your AI applications to provide real-time insights and responses with minimal latency.

Stay ahead in today's rapidly evolving business landscape. Deploy your AI models scalably and cost-effectively across your organization, and unlock their full potential.

Research Evidence

In collaboration with the Institute of Science and Technology Austria, Neural Magic conducts innovative LLM compression research and shares impactful findings with the open source community, including the state-of-the-art Sparse Fine-Tuning technique.

Business Advancements

1

Performance and Efficiency

Accelerate performance while maximizing the efficiency of underlying hardware with model optimization tools and inference serving.

2

Privacy

Keep your models, inference requests, and fine-tuning datasets within your organization's security domain.

3

Flexibility

Bring AI to your data and your users in the location of your choice, whether cloud, datacenter, or edge.

4

Control

Deploy on the platforms of your choice, from Docker to Kubernetes, while staying in charge of the model lifecycle to ensure regression-free upgrades.

Learning & Impact