Feb 20, 2025

This blog recaps the February 6th vLLM Office Hours, where our host Michael Goin was joined by Roger Wang, a vLLM committer from Roblox, to discuss the new multimodal capabilities in vLLM V1.

vLLM V1: Accelerating Multimodal Inference for Large Language Models

In the AI space, efficient inference isn’t just about speed, it’s about flexibility, scalability, and the ability to seamlessly handle diverse data modalities - beyond just text. vLLM has emerged as the open source standard for serving language model inference, supporting models from Hugging Face and beyond across a wide array of hardware. With robust support for GPUs, TPUs, and even CPUs, vLLM is paving the way for next-generation multimodal applications.

In this article, we dive into the innovations behind vLLM V1 (V1 Alpha), which addresses the challenges of multimodal inference encountered in V0. We’ll explore the design decisions that enhance performance, from encoder caching to optimized data processing and share benchmark results that highlight the improvements. Finally, we’ll outline our vision for future work to further push the boundaries of efficient, scalable AI.

About vLLM

vLLM is the go-to open source model serving framework for LM inference. Its design emphasizes:

- Speed and Ease of Use: vLLM works out-of-the-box with models from Hugging Face and supports dozens of key models.

- Hardware Versatility: Built on PyTorch, vLLM isn’t limited to Nvidia GPUs, it extends support to AMD GPUs, Google TPUs, AWS Accelerators, Intel accelerators, and even CPUs.

- Beyond Text-Only Models: Today’s applications demand multimodal capabilities. vLLM now supports not only text but also images, audio, and video inputs—enabling tasks like document parsing, object recognition, video understanding, and computer-use.

- Advanced Inference Optimizations: With features like quantization, chunked prefill, and prefix caching, vLLM is continually optimized for both high-throughput and low-latency inference.

Overview of Large Multimodal Models

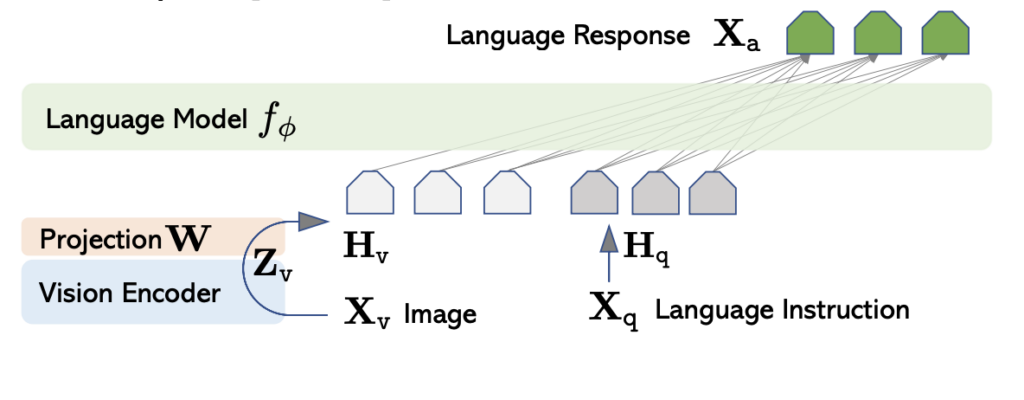

Modern large multimodal models typically leverage a decoder-only language model (LM) backbone paired with an encoder for non-text modalities. In practice, when you provide an image or audio clip, it’s first transformed into embeddings by a dedicated encoder. These embeddings are then merged with text embeddings and fed into the decoder LM.

Source: https://encord.com/blog/llava-large-language-vision-assistant/

For example:

- LLaVA: Uses CLIP to encode images into embeddings before merging them with text.

- Qwen2-Audio: Uses a Whisper audio encoder to process audio inputs, which are then merged with text embeddings for decoding.

vLLM’s flexible architecture now supports this diverse range of inputs, setting the stage for richer, more capable multimodal applications.

What Went Wrong in vLLM V0

While vLLM V0 set the foundation, it wasn’t without limitations, especially when dealing with multimodal inputs:

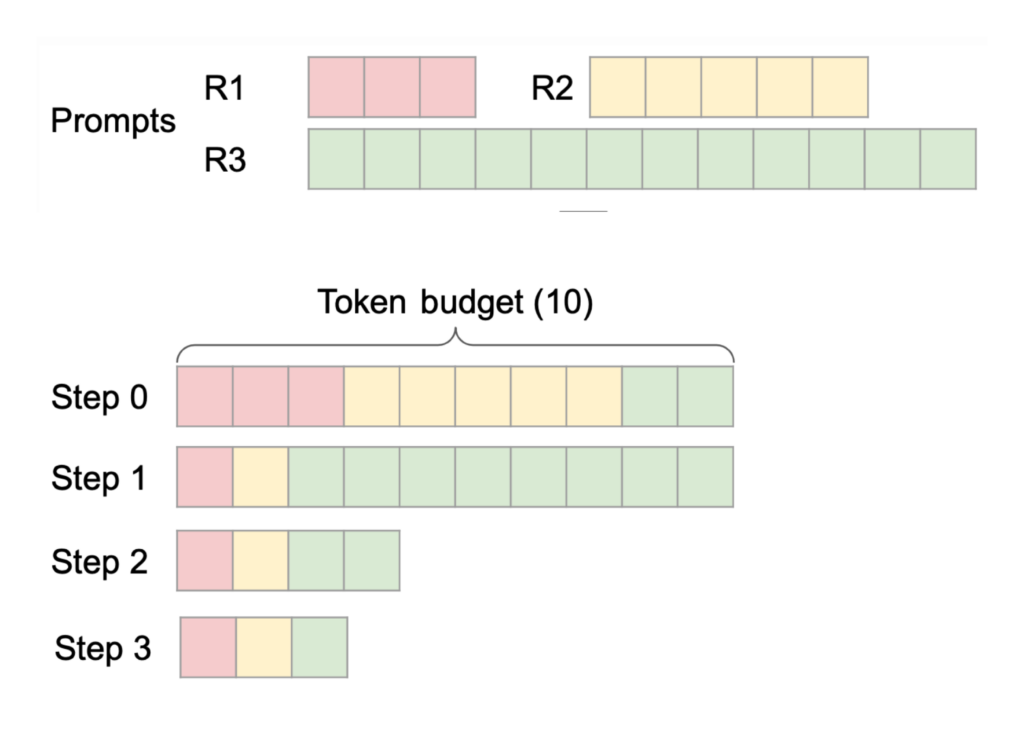

Chunked Prefill Challenges

Chunked prefill allows prompts to be partially prefilled so that long requests don’t block the entire decoding process of existing requests. For example, with three incoming requests (R1, R2, R3), R1 and R2 might be fully prefilled, while only a portion of R3 is prefilled initially. This staggered approach keeps latency in check.

However, multimodal embeddings are continuous by nature and cannot be broken into discrete tokens to be incrementally produced. If an image produces 10 embeddings but only 2 tokens are reserved in a prefill chunk, a shape mismatch occurs. Early designs assumed a direct merge into text embeddings, which proved problematic.

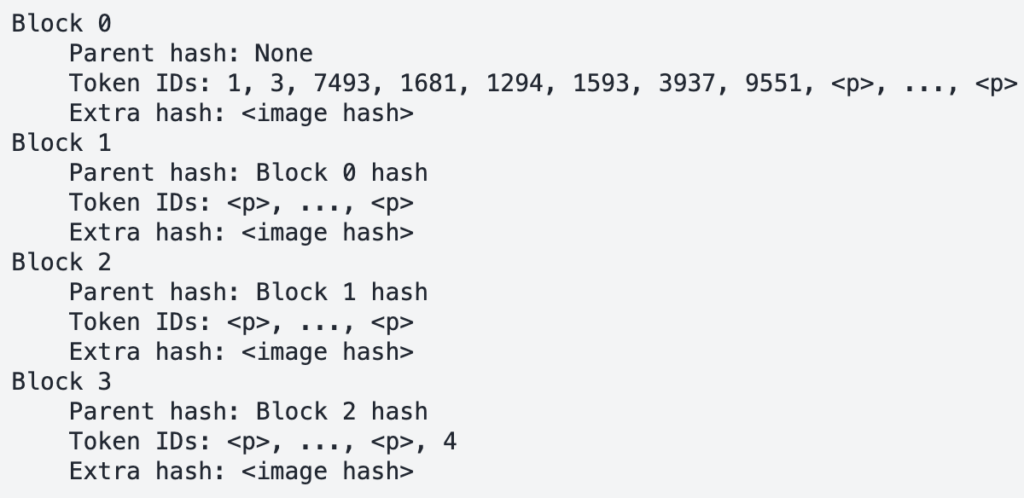

Prefix Caching Limitations

In V0, prefix caching was based solely on token IDs. For multimodal inputs, where placeholder tokens (e.g., <image>) are identical across requests, this led to cache collisions. Different images sharing the same placeholder would mistakenly trigger cached results, compromising correctness.

Innovations in vLLM V1

vLLM V1 introduces several key improvements to overcome these challenges:

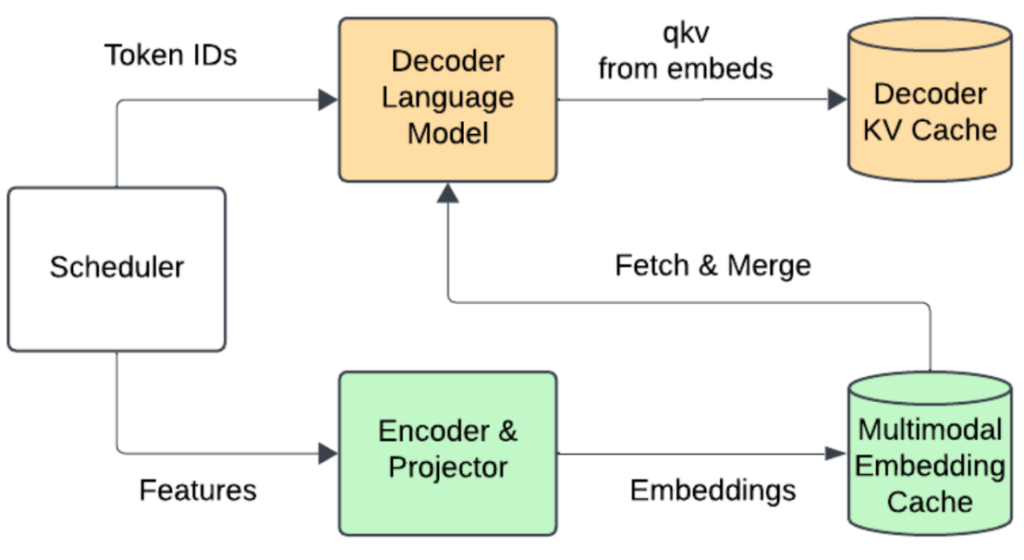

1. Encoder Cache and Encoder-Aware Scheduler

The Challenge:

Repeatedly regenerating multimodal embeddings for every prefill operation can be inefficient, especially when a single image may generate thousands of embeddings (e.g., Pixtral produces 4096 embeddings for a single 1024x1024 image).

The V1 Solution:

- Encoder Cache: Multimodal embeddings are computed once and stored directly on the GPU.

- Encoder-Aware Scheduler: The scheduler tracks the positions of multimodal embeddings within each request. When merging with text embeddings, it retrieves cached data, eliminating redundant encoder execution.

Why a GPU Cache?

Transferring tensors to and from CPU memory is often more expensive than re-executing the encoder. Keeping the cache on the GPU minimizes latency.

2. Enhanced Prefix Caching with Metadata

To address the shortcomings of token-ID–based caching, V1 incorporates additional metadata, such as hashes of images or audio chunks, into the caching mechanism. This ensures that even if placeholder tokens are identical, the underlying multimodal content is correctly distinguished.

3. Optimized Multimodal Data Processing

In V0, converting raw data (e.g., PIL images) to tensors was a blocking CPU operation, often stalling GPU kernels. V1 tackles this by decoupling the processes:

- Process 0 (CPU): Handles input processing and raw data conversion.

- Process 1 (GPU): Executes the forward pass independently.

This asynchronous pipeline ensures that heavy CPU operations do not block GPU performance, leading to significant latency reductions.

4. Multimodal Feature Caching

Beyond prefix caching, V1 introduces feature caching for raw data conversion:

- Dual Mirror Caches: Both CPU and GPU processes maintain mirrored caches on CPU memory, minimizing data transfers.

- Efficient Hashing: Using consistent hashes for raw data allows the system to skip redundant conversions, improving throughput in both online and offline scenarios.

Benchmark Results

vLLM V1’s improvements have been validated across two key scenarios:

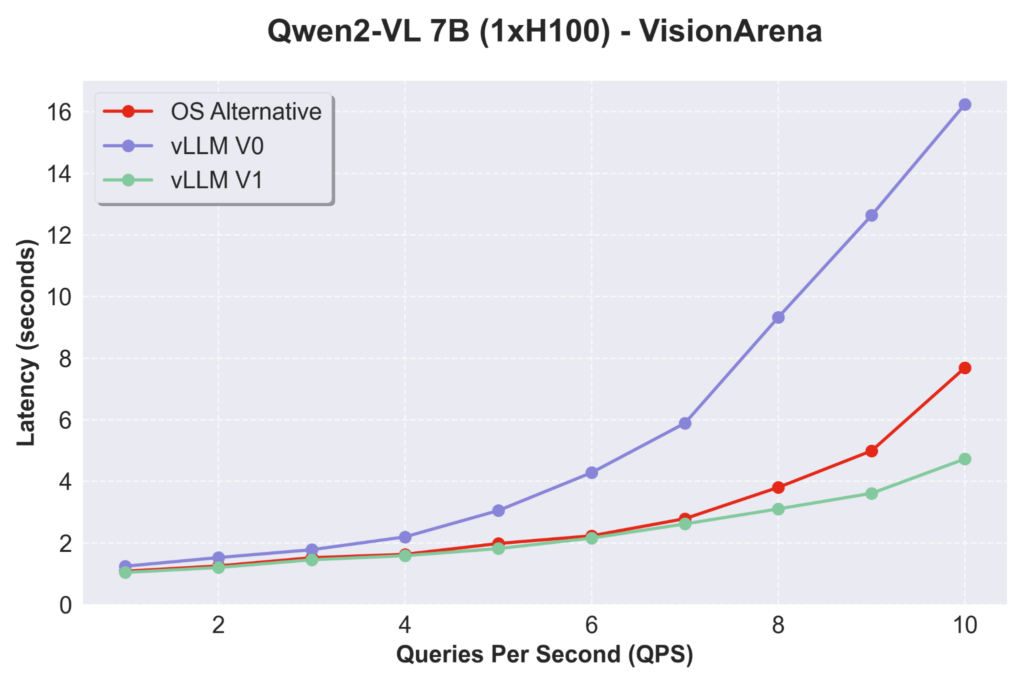

Online Serving

Using the Qwen2-VL 7B model on the VisionArena dataset - a real-world vision QA benchmark - vLLM V1 demonstrates:

- Low Latency at High QPS: While differences are subtle at low QPS, at higher throughput, V1 significantly outperforms V0.

- Competitive Edge: When compared with other open source alternatives, V1 maintains superior performance in high QPS regimes.

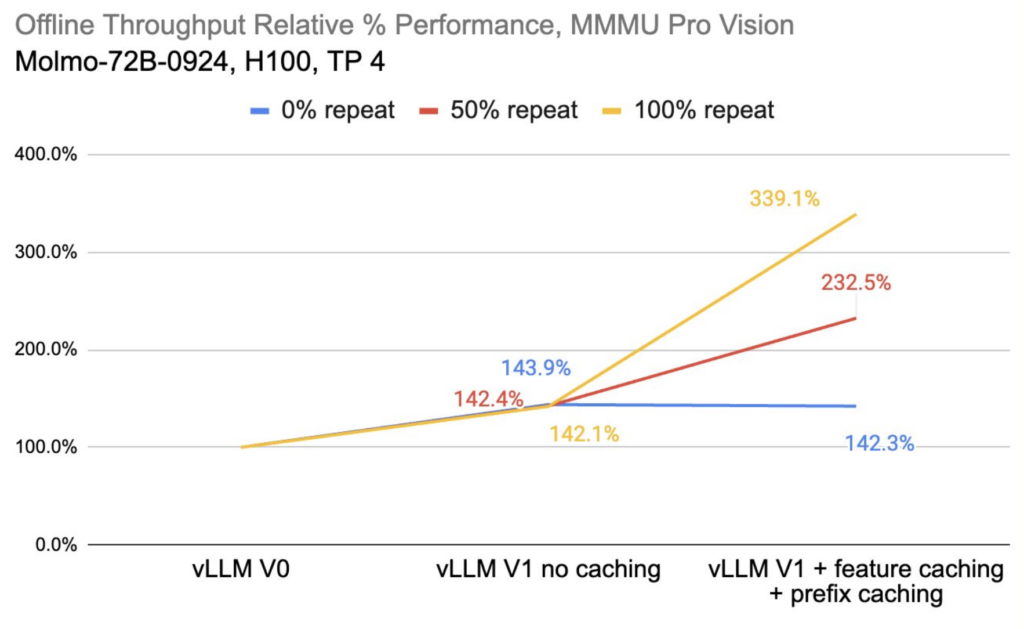

Offline Inference

For offline inference, we benchmarked using the MMMU Pro Vision dataset with the Molmo-72B model using 4xH100s:

- Throughput Gains: vLLM V1, even without caching, shows around a 40% performance boost over V0.

- Caching Benefits: With both prefix and feature caching enabled, scenarios with repeated requests (up to 100% repeat) experience dramatic throughput improvements. Even for unique prompts, the overhead is minimal compared to the benefits.

Future Work

vLLM V1 is a significant step forward, but there’s more on the horizon:

- Expanding Multimodal Model Support: Not all multimodal models are supported in V1 yet. Look for the updated support list indicating green checks for full compatibility.

- Optimizing Mixed Modality Inference: Future enhancements will address use cases that combine multiple modalities (e.g., text with audio and images).

- Multimodal Output Generation: We plan to support autoregressive multimodal generation - extending beyond text output to audio, video, and image outputs (e.g., OpenBMB MiniCPM-O, Nvidia Cosmos, DeepSeek Janus-Pro).

- Multimodal Input Streaming: Upcoming features will enable real-time streaming of video and audio inputs, allowing dynamic prefill and improved interactive experiences.

Conclusion

vLLM V1 marks a pivotal upgrade in serving large, multimodal language models. By addressing the challenges of chunked prefill, enhancing caching mechanisms, and optimizing data processing pipelines, V1 delivers lower latency, higher throughput, and robust performance across diverse hardware platforms.

Neural Magic (now part of Red Hat) is proud to be a top commercial contributor to vLLM, driving these innovations forward and empowering the community with open, efficient, and scalable AI solutions. We invite you to explore vLLM V1, experiment with our open source tools, and join us in shaping the future of multimodal inference.

For more information and to get started with vLLM visit the GitHub repository. See more on the support vLLM provides for multi-modal models here.

Feel free to reach out with questions or to share your feedback as we continue to evolve vLLM!