About Us

AI Model Optimization and Accelerated Inference Serving

Our deep expertise has led us to invent the industry's state-of-the-art methods for model quantization and sparsification, as well as algorithms for inference acceleration.

Our Story

Neural Magic is a Series A company with a mission to enable enterprise deployment of open-source machine learning models across the edge, datacenter, and cloud. Our deep expertise in AI model optimization and high-performance computing has led us to invent the industry's state-of-the-art techniques for model quantization and sparsification, as well as for inference server acceleration. Building on this work, we offer subscription-based, enterprise-grade inference server solutions that deliver maximum speed and efficiency on both GPUs and CPUs. Neural Magic is headquartered in Somerville, MA, and was founded in 2018 by MIT professor Nir Shavit and MIT research scientist Alex Matveev.

FOCUS

Open and Transparent Innovation

We believe in and support the open-source AI community as a collaborative environment where developers, researchers, and organizations can explore, contribute, and benefit from shared knowledge that fuels the future direction of AI innovation.

The Art of the Possible

As a research-driven company, we are not bound by what seems impossible. Instead, we nurture a culture where anything is possible: one led by curiosity and driven by customer obsession, so that AI is put to uses that are impactful and beneficial.

Humility

In today’s dynamic AI landscape, where innovations and challenges emerge and shift rapidly, humility encourages our team to acknowledge limitations and embrace feedback and input from others. At Neural Magic, humility promotes a culture of respect and inclusivity in which all team members’ contributions are valued, regardless of their position or expertise.

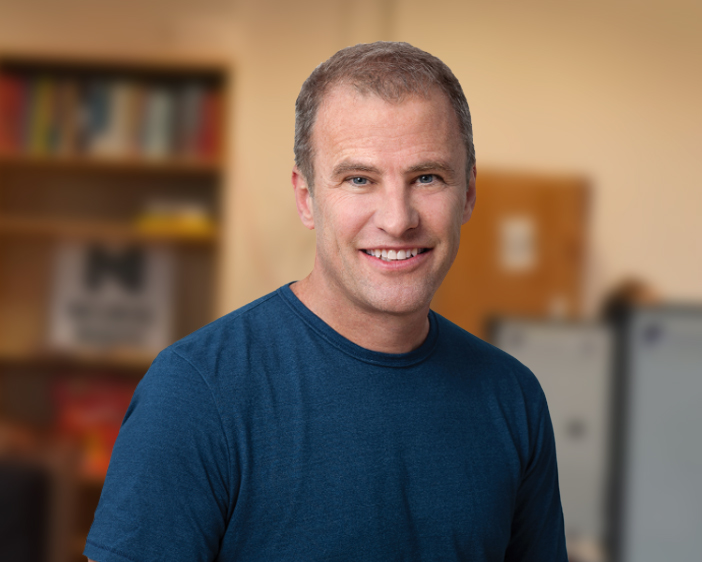

Innovators

CEO

Former VP of Product and CTO of Google Cloud; former CTO and EVP of Worldwide Engineering at Red Hat

Co-Founder

MIT Professor of Electrical Engineering and Computer Science, ACM Fellow

Principal Research Scientist

Professor at IST Austria with a research focus on high-performance algorithms for machine learning

Teamwork

At Neural Magic, we believe great things happen when talented people come together with a shared vision and a relentless drive to make a difference. Our diverse team is a dedicated group of engineers, researchers, and professionals who bring passion, creativity, and expertise to everything they do. Spanning 10 countries on 3 continents and working across 7 time zones, our team is committed to pushing the boundaries of AI to help customers achieve their business goals.