Why Neural Magic

Streamline your AI model deployment while maximizing computational efficiency with Neural Magic as your enterprise inference server solution.

Our inference server solutions support the leading open-source LLMs across a broad set of infrastructure, so you can run your models securely wherever you need them: in the cloud, in a private data center, or at the edge.

Deploy your AI models in a scalable and cost-effective way across your organization and unlock the full potential of your models.

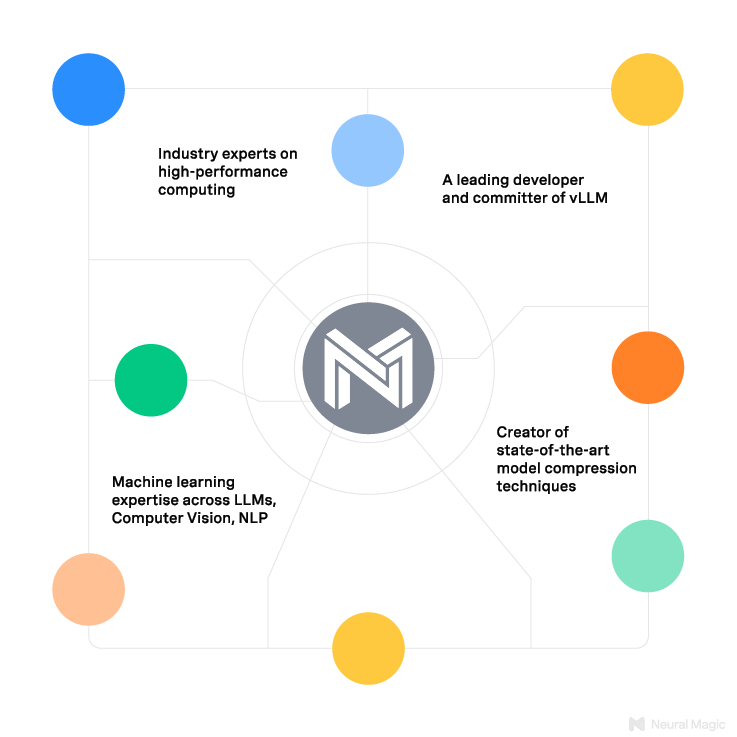

Who is Neural Magic?

Neural Magic is a unique company made up of machine learning, enterprise, and high-performance computing experts.

We’ve developed leading enterprise inference solutions that maximize performance and increase hardware efficiency across both GPU and CPU infrastructure. We sweat the details: our inference optimizations reach down to the instruction level across a broad set of GPU and CPU architectures.

As machine learning model optimization experts, we can further increase inference performance through our cutting-edge model optimization techniques.

Our inference-serving expertise gives IT teams responsible for scaling open-source LLMs in production a reliable, supported inference server solution, so enterprises can deploy AI with confidence.

From Research to Code

In collaboration with the Institute of Science and Technology Austria, Neural Magic develops innovative LLM compression research and shares impactful findings with the open-source community, including the state-of-the-art GPTQ and SparseGPT techniques.

Explore

Enterprise inferencing system for deployments of open-source large language models (LLMs) on GPUs.

Business Benefits

1

Efficiency Up, Cost Down

Reduce the hardware needed to support AI workloads through more efficient inference on the infrastructure you already own.

2

Privacy and Security

Keep your model, your inference requests, and your fine-tuning data sets within the security domain of your organization.

3

Deployment Flexibility

Bring AI to your data and your users in the location of your choice, across cloud, data center, and edge.

4

Control of the Model Lifecycle

Deploy within the platforms of your choice, from Docker to Kubernetes, while staying in charge of the model lifecycle to ensure regression-free upgrades.

Learning & Impact